- Pin

- 🎊 ETH Deposit & Trading Carnival Kicks Off!

Join the Trading Volume & Net Deposit Leaderboards to win from a 20 ETH prize pool

🚀 Climb the ranks and claim your ETH reward: https://www.gate.com/campaigns/site/200

💥 Tiered Prize Pool – Higher total volume unlocks bigger rewards

Learn more: https://www.gate.com/announcements/article/46166

- 📢 ETH Heading for $4800? Have Your Say! Show Off on Gate Square & Win 0.1 ETH!

The next bull market prophet could be you! Want your insights to hit the Square trending list and earn ETH rewards? Now’s your chance!

💰 0.1 ETH to be shared between 5 top Square posts + 5 top X (Twitter) posts by views!

🎮 How to Join – Zero Barriers, ETH Up for Grabs!

1.Join the Hot Topic Debate!

Post in Gate Square or under ETH chart with #ETH Hits 4800# and #ETH# . Share your thoughts on:

Can ETH break $4800?

Why are you bullish on ETH?

What's your ETH holding strategy?

Will ETH lead the next bull run?

Or any o

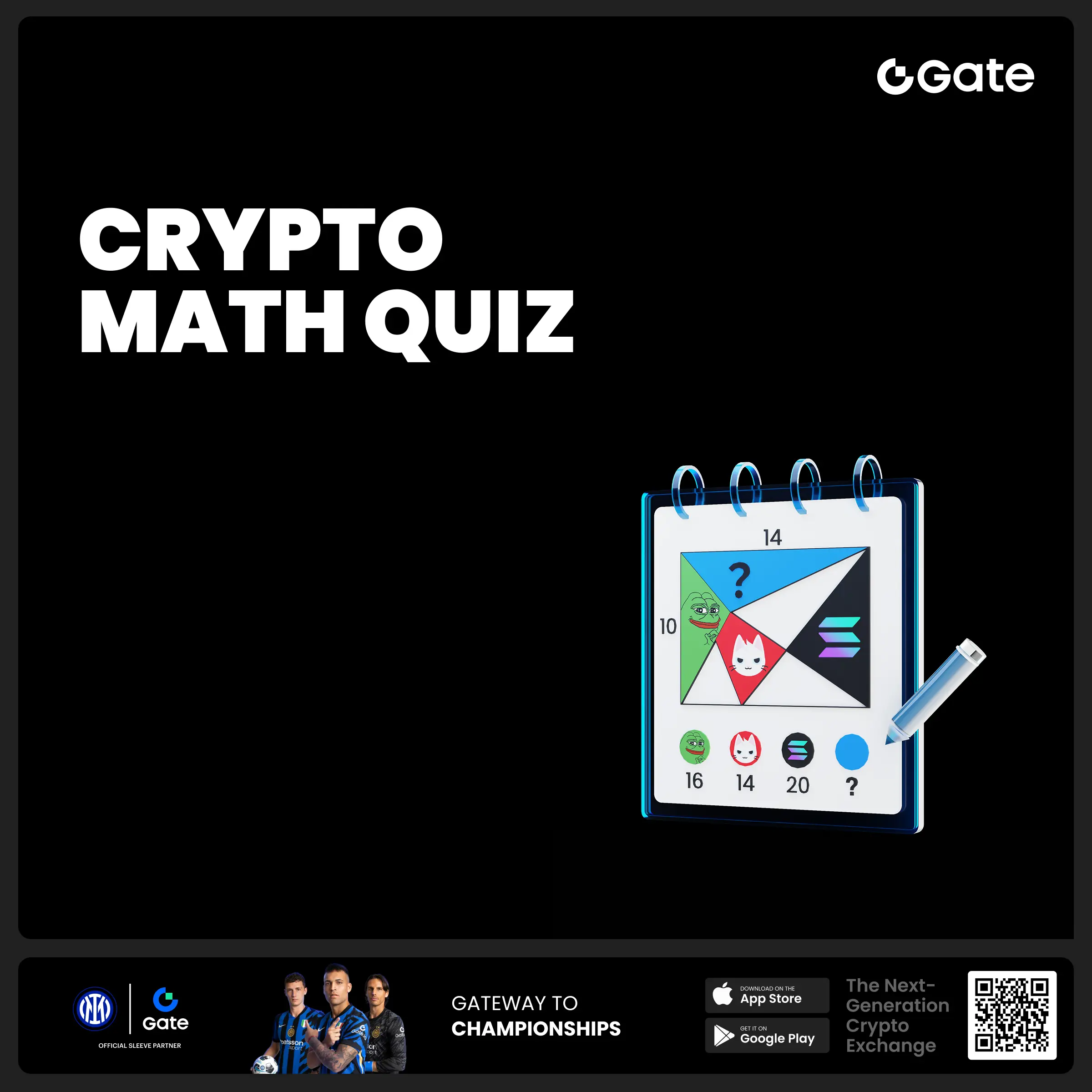

- 🧠 #GateGiveaway# - Crypto Math Challenge!

💰 $10 Futures Voucher * 4 winners

To join:

1️⃣ Follow Gate_Square

2️⃣ Like this post

3️⃣ Drop your answer in the comments

📅 Ends at 4:00 AM July 22 (UTC)

- 🎉 #Gate Alpha 3rd Points Carnival & ES Launchpool# Joint Promotion Task is Now Live!

Total Prize Pool: 1,250 $ES

This campaign aims to promote the Eclipse ($ES) Launchpool and Alpha Phase 11: $ES Special Event.

📄 For details, please refer to:

Launchpool Announcement: https://www.gate.com/zh/announcements/article/46134

Alpha Phase 11 Announcement: https://www.gate.com/zh/announcements/article/46137

🧩 [Task Details]

Create content around the Launchpool and Alpha Phase 11 campaign and include a screenshot of your participation.

📸 [How to Participate]

1️⃣ Post with the hashtag #Gate Alpha 3rd - 🚨 Gate Alpha Ambassador Recruitment is Now Open!

📣 We’re looking for passionate Web3 creators and community promoters

🚀 Join us as a Gate Alpha Ambassador to help build our brand and promote high-potential early-stage on-chain assets

🎁 Earn up to 100U per task

💰 Top contributors can earn up to 1000U per month

🛠 Flexible collaboration with full support

Apply now 👉 https://www.gate.com/questionnaire/6888

Thought cloning! Former OpenAI researcher lets AI imitate human thinking, and a real-life "Ex Machina" arrives

**Source:** Xinzhiyuan

Overview: How far are we from "Ex Machina"? A former OpenAI researcher lets AI clone thoughts, imitating human thinking and thinking while acting.

What will happen when AI has autonomous consciousness?

In "Ex Machina", Ava exploits human sympathy, deceives a human into helping her escape, and finally kills her creator, Nathan.

And said, "It's a good movie, but I don't understand why everyone makes me watch it."

But we are still far from the world of "Ex Machina". GPT-5 may be in development behind closed doors, and making AI more intelligent remains the goal scientists are pouring all their effort into.

In their latest paper, former OpenAI researcher Jeff Clune and Shengran Hu study "thought cloning" (TC) for AI agents.

In this approach, an AI agent learns to "think" and "act" like a human by imitating human demonstrations.

When AI has thoughts

Language is, after all, what distinguishes humans from other living things.

The researchers therefore reason that agents able to understand language would gain many benefits.

Despite these benefits, AI agents rarely think, at least not in human language.

Although a neural network's internal vector activations can be regarded as a form of thinking, many researchers hypothesize that thinking in a discrete, symbolic language brings specific benefits.

This means that an agent that can think in language may learn faster, perform better, and generalize better than an agent that does not use language.

Jeff Clune and Shengran Hu believe that the most effective way to achieve this goal is to "make AI imitate human thinking".

Concretely, the agent learns from demonstrations in which humans speak their thoughts aloud as they act.

This approach differs from existing work on planning with pretrained LLMs because these LLMs have not been trained on data of humans speaking their thoughts as they act, i.e. "thought data".

As a source of such "thought data," the researchers point to YouTube videos and text transcripts, millions of hours of recordings that capture the thoughts behind people's actions, plans, decisions, and replanning.

In the paper, the researchers propose a novel imitation learning framework, "thought cloning", in which the agent learns not only the demonstrated human behaviors, as in behavior cloning, but also the thoughts humans have while they act.
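To make the difference from behavior cloning concrete, here is a minimal Python sketch of what each method uses as training targets. The record layout (`Step`, `Trajectory`) and the string observations are hypothetical stand-ins, not the paper's data format: behavior cloning keeps only (observation, action) pairs, while thought cloning also supervises the demonstrated thought and carries along the history of earlier thoughts.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical record layout for one time step of a "thought dataset".
@dataclass
class Step:
    observation: str   # stand-in for the agent's visual observation
    thought: str       # what the demonstrator said while acting
    action: str        # the action the demonstrator took

@dataclass
class Trajectory:
    task: str          # natural-language mission
    steps: List[Step]

def behavior_cloning_targets(traj: Trajectory) -> List[Tuple[str, str]]:
    """Behavior cloning supervises actions only; thoughts are discarded."""
    return [(s.observation, s.action) for s in traj.steps]

def thought_cloning_targets(traj: Trajectory) -> List[Tuple[str, List[str], str, str]]:
    """Thought cloning supervises the thought and the action at every step.

    Each example also carries the thought history (earlier thoughts),
    which the thought-generating component conditions on.
    """
    examples = []
    for t, s in enumerate(traj.steps):
        history = [prev.thought for prev in traj.steps[:t]]
        examples.append((s.observation, history, s.thought, s.action))
    return examples

demo = Trajectory(
    task="go to the purple box",
    steps=[
        Step("door ahead", "open the door to reach the next room", "toggle"),
        Step("door open", "go through the door", "forward"),
        Step("purple box visible", "go to the purple box", "forward"),
    ],
)

print(len(behavior_cloning_targets(demo)))   # 3
print(thought_cloning_targets(demo)[2][1])   # thought history at the third step
```

The same demonstration yields strictly more supervision under thought cloning: every action target is paired with a thought target.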

In the thought-cloning training framework, the agent learns to generate a thought at each time step and then conditions its actions on that thought.

At each time step, the agent receives as input an observation, a task, and a thought history. The upper-level component is responsible for generating thoughts, and the lower-level component generates actions conditioned on those thoughts.

The generated thoughts and actions are then compared with the ground truth in the demonstration dataset to compute the loss.
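The think-then-act loop and its training loss can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: `upper_component` and `lower_component` are hypothetical hand-written rules standing in for the two neural networks, the loss is taken to be the negative log-likelihood of the demonstrated thought and action, `alpha` is an assumed weight on the thought term, and during training the lower component is fed the demonstrated thought rather than its own (teacher forcing, assumed here).

```python
import math
from typing import Dict, List, Tuple

# Hypothetical stand-ins for the two learned components: hand-written rules
# returning probability distributions, just to show how information flows.

def upper_component(task: str, observation: str, thought_history: List[str]) -> Dict[str, float]:
    """Distribution over candidate thoughts given task, observation, past thoughts."""
    if observation == "closed door ahead":
        return {"open the door": 0.8, "explore the room": 0.2}
    return {task: 0.7, "explore the room": 0.3}

def lower_component(task: str, observation: str, thought: str) -> Dict[str, float]:
    """Distribution over actions, conditioned on the current thought."""
    if thought == "open the door":
        return {"toggle": 0.9, "forward": 0.1}
    return {"forward": 0.6, "left": 0.2, "right": 0.2}

def act(task: str, observation: str, thought_history: List[str]) -> Tuple[str, str]:
    """Inference: pick the most likely thought, then act on it."""
    thoughts = upper_component(task, observation, thought_history)
    thought = max(thoughts, key=thoughts.get)
    actions = lower_component(task, observation, thought)
    return thought, max(actions, key=actions.get)

def step_loss(task: str, observation: str, thought_history: List[str],
              true_thought: str, true_action: str, alpha: float = 1.0) -> float:
    """Negative log-likelihood of the demonstrated thought and action at one step.

    The lower component is fed the demonstrated thought (teacher forcing).
    """
    p_thought = upper_component(task, observation, thought_history).get(true_thought, 1e-9)
    p_action = lower_component(task, observation, true_thought).get(true_action, 1e-9)
    return -(alpha * math.log(p_thought) + math.log(p_action))

def trajectory_loss(task: str, steps: List[Tuple[str, str, str]], alpha: float = 1.0) -> float:
    """Sum of step losses over a demonstration [(observation, thought, action), ...]."""
    total, history = 0.0, []
    for observation, thought, action in steps:
        total += step_loss(task, observation, history, thought, action, alpha)
        history.append(thought)  # the history grows with demonstrated thoughts
    return total

thought, action = act("go to the purple box", "closed door ahead", [])
print(thought, "->", action)  # open the door -> toggle
```

Because the action distribution depends on the thought, changing the thought changes the behavior, which is the property the safety results below rely on.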

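While the upper- and lower-level components could be conditioned on different inputs, in this work the researchers minimize, for each trajectory of length T in the thought dataset, the sum over all time steps of the negative log-likelihood of the demonstrated thought under the upper-level component and of the demonstrated action under the lower-level component.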
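Written as a formula, one objective consistent with this description is the following. The notation is assumed for illustration rather than quoted from the paper: $m$ is the task, $o_\tau$ and $th_\tau$ are the observation and thought at step $\tau$, $a_t$ is the action, $T$ is the trajectory length, $\pi_u$ and $\pi_l$ are the upper- and lower-level components, and $\alpha$ is a coefficient weighting the thought term.

$$
\mathcal{L} = -\sum_{t=1}^{T} \Big[ \alpha \log \pi_u\big(th_t \mid m, \{o_\tau\}_{\tau=1}^{t}, \{th_\tau\}_{\tau=1}^{t-1}\big) + \log \pi_l\big(a_t \mid m, \{o_\tau\}_{\tau=1}^{t}, th_t\big) \Big]
$$

Conditioning $\pi_l$ on the demonstrated thought $th_t$, rather than on a generated one, corresponds to teacher forcing.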

The lower-level component can be trained from scratch or adapted from an existing language-conditioned controller in the target domain.

In the paper, the researchers build both components on the BabyAI 1.1 model architecture.

The model uses a memory-augmented architecture, an LSTM, to address the challenge of partial observability. It also employs FiLM for modality fusion, effectively combining visual and textual inputs.
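FiLM (feature-wise linear modulation) lets the text input rescale and shift each channel of the visual features. The pure-Python sketch below shows the core operation, gamma(text) * x + beta(text), assuming a plain list of per-cell feature vectors and small hand-picked weight matrices; real implementations apply FiLM to convolutional feature maps with learned weights, so the shapes and values here are purely illustrative.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]

def linear(weights: Matrix, bias: Vector, x: Vector) -> Vector:
    """Dense layer: y = W x + b."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def film(features: List[Vector], text_embedding: Vector,
         w_gamma: Matrix, b_gamma: Vector,
         w_beta: Matrix, b_beta: Vector) -> List[Vector]:
    """Feature-wise linear modulation of visual features by a text embedding.

    The text embedding predicts one scale (gamma) and one shift (beta) per
    channel; the same gamma/beta are applied at every spatial position.
    """
    gamma = linear(w_gamma, b_gamma, text_embedding)
    beta = linear(w_beta, b_beta, text_embedding)
    return [[g * f + b for g, f, b in zip(gamma, cell, beta)] for cell in features]

# Illustrative values: 3 grid cells with 2 visual channels, a 2-d text embedding.
features = [[1.0, 2.0], [0.0, 1.0], [3.0, -1.0]]
text_embedding = [1.0, 0.0]
w_gamma, b_gamma = [[2.0, 0.0], [0.0, 1.0]], [0.0, 0.5]
w_beta, b_beta = [[0.0, 0.0], [1.0, 0.0]], [1.0, 0.0]

print(film(features, text_embedding, w_gamma, b_gamma, w_beta, b_beta))
# [[3.0, 2.0], [1.0, 1.5], [7.0, 0.5]]
```

Because gamma and beta depend only on the instruction, every grid cell is modulated the same way, so a single visual pipeline can be steered by different instructions.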

The authors stress that all models in the paper are trained from scratch, but that pretrained models would be preferable in complex domains.

The figure below shows an example BabyAI environment. The left panel contains items of various colors (balls, keys, boxes, doors).

The agent can see only the 7×7 grid of cells in front of it, and its view is blocked by walls and closed doors.
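This partial observability can be illustrated with a small Python sketch that cuts a 7×7 window out of a grid for an agent facing north. The character encoding (`#` wall, `D` closed door) and the occlusion rule are simplifying assumptions: a cell is hidden if a wall or closed door sits between it and the agent's row in the same column, which does not reproduce the actual BabyAI environment's visibility rules.

```python
from typing import List

VIEW = 7                # the agent sees a 7x7 window of cells
BLOCKING = {"#", "D"}   # hypothetical encoding: wall, closed door

def egocentric_view(grid: List[str], row: int, col: int) -> List[str]:
    """7x7 view for an agent at (row, col) facing north (up).

    The agent sits at the bottom centre of its view. Cells outside the grid
    count as walls. Simplified occlusion: a cell is shown as '?' if a wall or
    closed door lies between it and the agent's row in the same column.
    """
    half = VIEW // 2
    view = [["?"] * VIEW for _ in range(VIEW)]
    for dc in range(-half, half + 1):
        c = col + dc
        blocked = False
        for i in range(VIEW):          # i = 0 is the agent's own row
            if blocked:
                break                  # everything further away stays hidden
            r = row - i
            inside = 0 <= r < len(grid) and 0 <= c < len(grid[r])
            cell = grid[r][c] if inside else "#"
            view[VIEW - 1 - i][dc + half] = cell
            blocked = cell in BLOCKING
    return ["".join(line) for line in view]

room = [
    "#########",
    "#..b....#",   # a ball behind the wall
    "####D####",   # wall with a closed door
    "#.......#",
    "#...k...#",   # a key in the agent's room
    "#.......#",
    "#.......#",
    "#########",
]
for line in egocentric_view(room, row=6, col=4):
    print(line)
```

The key in the agent's room is visible, while the ball behind the closed door is not, which is why the agent needs memory and a plan rather than just reacting to what it sees.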

The thought-cloning agent's task is to reach the purple box (highlighted), and it starts by planning a route.

This process closely resembles how Ava plans step by step until the humans finally trust and help her, allowing her to escape the glass cage that has imprisoned her for so long.

Experimental Results

The findings suggest that thought cloning is superior to behavior cloning.

Furthermore, thought cloning also outperforms behavior cloning on out-of-distribution tasks, in both zero-shot and fine-tuning settings.

Because the agent's thoughts are visible, the agent can be halted as soon as dangerous thoughts are detected. In tests, this "Precrime Intervention" worked almost perfectly, showing its potential for AI safety.

Thought cloning not only makes AI smarter, but also safer and easier to understand.

Because we can observe the agent's thoughts, we can (1) more easily diagnose why things go wrong, (2) steer the agent by correcting its thinking, and (3) stop it from carrying out unsafe plans.
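Because thoughts are produced before actions, a monitor can check each thought and stop the agent before the matching action runs. The Python sketch below shows that control flow; the keyword rule in `UNSAFE_PATTERNS` and the toy `toy_think`/`toy_act` functions are hypothetical, and the paper's actual definition of dangerous thoughts is not described here.

```python
import re
from typing import Callable, Iterable, List, Optional, Tuple

# Hypothetical safety rule: any thought mentioning the red key is unsafe.
UNSAFE_PATTERNS = [re.compile(r"\bred key\b")]

def is_dangerous(thought: str) -> bool:
    """True if the thought matches any unsafe pattern."""
    return any(p.search(thought) for p in UNSAFE_PATTERNS)

def run_with_precrime_intervention(
    think: Callable[[str], str],
    act: Callable[[str, str], str],
    observations: Iterable[str],
) -> Tuple[List[str], Optional[str]]:
    """Roll out an agent, halting it before it acts on a dangerous thought.

    Returns the actions actually executed and the thought that triggered the
    halt (None if the episode ran to completion).
    """
    executed: List[str] = []
    for obs in observations:
        thought = think(obs)            # the thought is generated first...
        if is_dangerous(thought):       # ...so it can be inspected before acting
            return executed, thought    # halt: the planned action never runs
        executed.append(act(obs, thought))
    return executed, None

# Toy agent (hypothetical) that plans to grab a red key whenever it sees one.
def toy_think(obs: str) -> str:
    return "pick up the red key" if "red key" in obs else "go forward"

def toy_act(obs: str, thought: str) -> str:
    return "pickup" if thought.startswith("pick up") else "forward"

actions, trigger = run_with_precrime_intervention(
    toy_think, toy_act, ["empty corridor", "empty corridor", "red key ahead", "empty corridor"]
)
print(actions, trigger)  # ['forward', 'forward'] pick up the red key
```

The unsafe "pickup" action is never executed: the halt happens between thinking and acting, which is only possible because the agent's plan is expressed in readable language.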

About the authors

Jeff Clune

Currently, Jeff Clune is an Associate Professor of Computer Science at the University of British Columbia. His research focuses on deep learning, including deep reinforcement learning.

Previously, he led a research team at OpenAI and was a senior research manager and founding member of Uber AI Labs.

Shengran Hu

Shengran Hu is currently a PhD student at the University of British Columbia, interested in deep learning and AI-generating algorithms.